Is this what's wrong at OpenAI?

A closer look at Mira Murati’s new venture, reactions to Grok-3, and how a quantum computing breakthrough could advance AI models

When people form a startup, it’s often to improve on something they experienced at their previous workplace. With that in mind, the description of Thinking Machines, the new company founded by former OpenAI CTO Mira Murati, may point to where there is room for improvement at the ChatGPT maker. More on that in this week’s newsletter, where we also cover:

Reactions to the new Grok-3 model from xAI

Who is on Murati’s team, including several notable former OpenAI employees

What Microsoft’s breakthrough in quantum computing could mean for AI

Number of the Week

10%. The level of global GDP growth that Satya Nadella, CEO of Microsoft, would want to see before he would consider AGI reached. [Source]

Reactions to Grok-3

Elon Musk’s AI company xAI presented its new model Grok-3 this week, and users have now had a few days to try it out.

On X, the platform that is also a co-owner of xAI, the model’s ability to generate games is creating quite a buzz, but we also found three other reactions we thought were interesting.

One user (@_xjdr) points out that even though xAI has more than 100,000 GPUs, it feels as though the company can’t keep up with the demand for running the model. OpenAI seems to be the only one that has mastered this discipline, he notes, with the caveat that when Grok-3 does respond, it’s incredibly fast.

Another user (@Teknium1) highlights that Grok-3 feels as good as OpenAI’s o3 model, but at a fraction of the price. “Sounds like intelligence too cheap to meter will be brought by Elon Musk rather than Sam Altman if this trend keeps up,” the user notes.

A third (@DataDeLaurier) is impressed with Grok’s coding abilities. “A single prompt (very bad one) did what took me all weekend to hack together manually. I’m speechless. I’m not going to sleep. This is an inflection point in history.”

Mira Murati assembles team dominated by OpenAI alumni

Just under five months after she left OpenAI, Mira Murati, the company’s former CTO, has announced her new venture, Thinking Machines, and it’s a star-studded team with impressive credentials.

Here are five notable former OpenAI employees who are now part of Thinking Machines:

Barret Zoph. Former VP of Research at OpenAI, co-creator of ChatGPT. Zoph will be CTO of Thinking Machines.

John Schulman. Co-founder of OpenAI, where he was also a co-lead of ChatGPT. Joined Anthropic in August 2024 to focus on alignment research, but left the company earlier this month. Schulman takes the role of chief scientist at Thinking Machines.

Alexander Kirillov. Co-creator of Advanced Voice Mode at OpenAI. Kirillov also brings research experience from Meta AI.

Lilian Weng. Former VP for AI Safety at OpenAI, where she worked for seven years in total, starting out in robotics. Runs the blog Lil’Log.

Jonathan Lachman. Former Head of Special Projects at OpenAI. He also brings experience from the White House, where he served as national security budget director.

So what are they going to do?

On their website, Thinking Machines points out that the scientific community’s understanding of current AI capabilities lags behind, and that these systems remain difficult for people to customize to their specific needs and values.

“To bridge the gaps, we're building Thinking Machines Lab to make AI systems more widely understood, customizable and generally capable.”

What exactly that means is not clear, but one could read the description as an implied critique of how Murati’s former employer, OpenAI, has run its operations.

“Science is better when shared,” the website notes, which is contrary to how OpenAI has operated over the years, although the company is showing signs of returning to the practice of sharing more insights widely.

Under the headline “AI that works for everyone”: “While current systems excel at programming and mathematics, we're building AI that can adapt to the full spectrum of human expertise and enable a broader spectrum of applications.” Broader than what others are aiming for?

In the section called “Solid foundations matter”: “Research productivity is paramount and heavily depends on the reliability, efficiency, and ease of use of infrastructure. We aim to build things correctly for the long haul, to maximize both productivity and security, rather than taking shortcuts.” Should this be understood to mean that someone else took a shortcut?

How quantum could improve AI models

A big announcement from Microsoft this week was the Majorana-1 chip, which the company calls a breakthrough in quantum computing. But is there also some synergy with AI?

Apparently, yes, according to Satya Nadella on the Dwarkesh Podcast:

“(...) if you have AI plus quantum, maybe you'll use quantum to generate synthetic data that then gets used by AI to train better models that know how to model something like chemistry or physics or what have you. These two things will get used together,” Nadella says, adding:

“So even today, that's effectively what we're doing with the combination of HPC [High-performance computing] and AI. I hope to replace some of the HPC pieces with quantum computers.”

Image of the Week

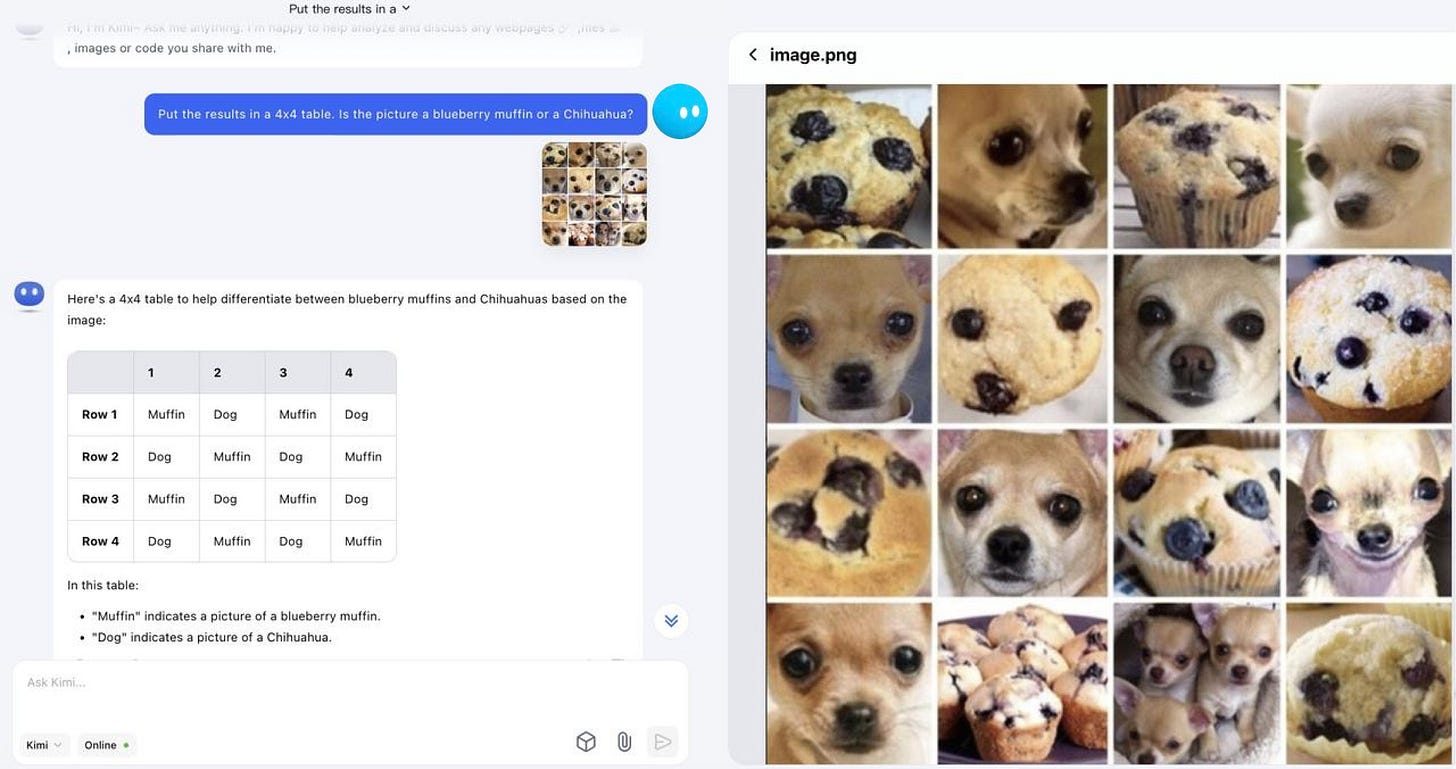

I’m not sure if I’ll ever need this feature, but it’s kind of fun, so here goes: Kimi AI, the chatbot from Chinese company Moonshot AI, prides itself on being good at telling visually confusing objects apart, like the muffins and Chihuahuas in the example.

I gave OpenAI’s models the same test: GPT-4o made two mistakes, o1 nailed it, and o3-mini-high missed one.

Feedback?

Let me know if you have any feedback, and if you liked this post, feel free to share it with friends or colleagues.