Google achieves ‘unprecedented’ results in simulating animal behavior with AI

Also: Korean students surprise with a new voice model, virtual employees could arrive next year, and more.

A slightly quieter week than usual, but still with some interesting developments that could have important ramifications in the future. DeepSeek is also rumored to release a strong new model next week, so this could be the calm before the storm.

Number of the Week

15%. The chance that current large language models are conscious, according to Kyle Fish, a researcher at Anthropic. [Source]

Google achieves ‘unprecedented’ results in simulating animal behavior with AI

To help scientists better understand how the brain, body, and environment together drive animal behavior, Google has developed a new AI simulation system that it hopes will enable discoveries not possible in real-life labs.

In partnership with HHMI Janelia, a research center in the US, Google DeepMind has now managed to simulate how a fruit fly walks, flies, and behaves.

The team digitally constructed a detailed model of the fly with 67 body parts, then customized the MuJoCo physics simulator with fly-specific features, such as mimicking the gripping force of the insect’s feet.

Finally, they trained a neural network on videos of real fly behavior.

“This enables it to learn how to move the virtual insect in the most realistic way,” Google writes.

In a preprint paper describing the work, the team notes that this improved understanding of the interplay between brain, body, and environment could also help advance knowledge about humans.

“It has recently been proposed that understanding the brain requires embodied models of the nervous system capable of generating an ethologically relevant repertoire of behavior,” they write, referencing a 2023 paper whose authors include renowned scientists such as Yoshua Bengio, Jeff Hawkins, and Yann LeCun.

“This NeuroAI approach is enabled with unprecedented spatial and temporal detail by our body model and imitation-learning framework.”

Next up for the simulation researchers is the zebrafish, a species that reportedly shares 70% of its protein-coding genes with humans.

Korean students develop voice model that exceeds performance of big labs

Two students from South Korea set out to build a rival to Google’s NotebookLM, ElevenLabs, and Sesame, but one that offers more control.

After three months of work, they released the model, Dia, through their company Nari Labs.

“Somehow… we pulled it off,” Toby Kim, one of the two, notes in a viral tweet, which also emphasizes that the duo have not received external funding. ElevenLabs and Sesame, for comparison, have raised $281 million and $47.5 million, respectively.

The model has been well received: Dia is currently the number one trending model on Hugging Face and has amassed more than 12,300 stars on GitHub.

Their differentiator, Kim writes on Hacker News, is that unlike other text-to-speech models, which generate each speaker turn separately and stitch the turns together, Dia generates the entire conversation in a single pass.

“This makes it faster, more natural, and easier to use for dialogue generation.”

Some impressive demos can be found here, and the model is free to try here.

Anthropic: AI employees are one year away

There is much talk about AI agents these days, but Anthropic is now putting the spotlight on what could be thought of as the next generation: virtual employees. The company told Axios that it expects these to appear within the next year.

Virtual employees would be entities with their own memories, roles within the company, corporate accounts and passwords, and a higher level of autonomy than agents.

“In that world, there are so many problems that we haven’t solved yet from a security perspective that we need to solve,” says Jason Clinton, Anthropic’s chief information security officer.

Examples include which networks a virtual employee should have access to and who would be responsible for managing its actions, he added.

Possible solutions include tools that provide visibility into what an AI employee account is doing on a system.

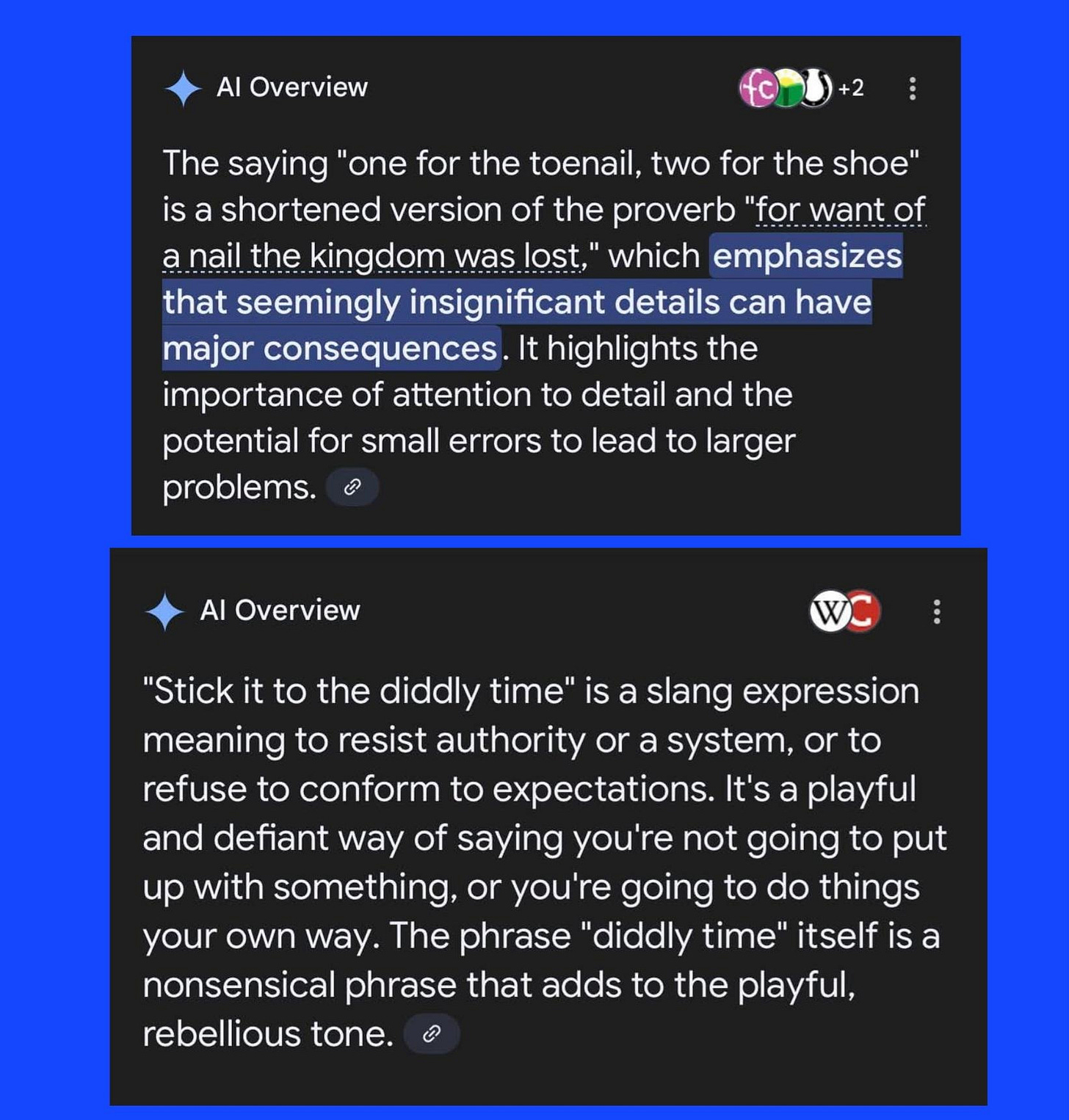

Image of the Week

Google’s integration of Gemini into its “AI Overviews” feature is leading to some unintended results. This Reddit user, for example, discovered that if you type in any phrase and add “meaning” at the end, Gemini will come up with a plausible-sounding explanation.

Exciting news out there?

A 27-year-old French woman who felt ill described her symptoms to ChatGPT, which said she had blood cancer. She initially ignored the result, but doctors later confirmed the diagnosis. [Source]

Feedback?

Thanks for reading, and feel free to respond directly with suggestions for improvement, tips, and anything in between :)